I used to run four VMs on my machine for application testing. Since I discovered containers, I run close to 40 or 50 on the very same machine. And where a VM takes about 5 minutes to start, I can start a container in under a second. Would you like to know how?

Virtualization: Using virtual machines

I have been using computers since 1982 or so. I came to Unix around 1984 and used it continuously until 1997. After that I had to use Windows, because Linux did not have the tools needed for regular office work. Still, because I needed Linux, I turned to an early-stage company called VMware.

Now, virtualization is a multi-billion dollar industry. Most datacenters have virtualized their infrastructure: CPUs, storage, and network. To support machine-level virtualization, technology developers are redefining the application stacks as well.

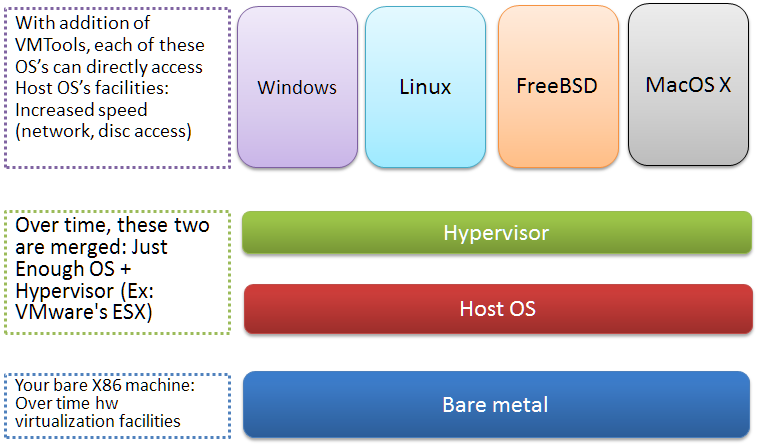

Let us see what virtualization normally looks like.

This kind of virtualization offers a lot of advantages:

- You get your own machine, where you can install your own OS. While this level of indirection is costly, several advances have helped over time.

- You get root privileges, so you can install whatever you want and offer services just as a physical machine would.

- Backup, restore, migration, and other management tasks are easy to handle with standard tools. There are tools to do the same on physical machines as well, but they are expensive and not easy to offer in a self-service model.

But let us also look at the disadvantages:

- It is expensive: If you just want to run a single application, it looks ridiculous to run an entire OS. We made tremendous progress in developing multi-user OSes, so why are we taking a step back towards single-user OSes?

- It is difficult to manage: It may be easier than managing a physical machine. But compared to running an app, it is a lot more complex. Imagine: you need to run not only the application, but the OS as well.

To put it differently, let us take each of the advantages and see how they are meaningless in a lot of situations:

- What if we don’t need to run different OSes? The original reason for running different OSes was to test apps on each of them (Windows came in many different flavors, one for each language, and with different patch levels).

- Now, client apps run on the web, a uniform platform. So there is no need to test web apps on multiple OSes.

- Server apps can and do depend not on the OS, but on specific packages. For instance, an application may run on any version of Linux, as long as Python 2.7 and some specific packages are present.

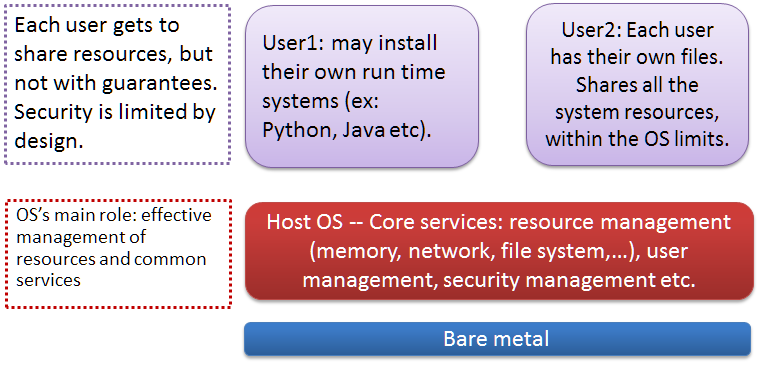

Virtualization: Multi-user operating systems

We have a perfect system to run such applications: [Unix](http://www3.alcatel-lucent.com/bstj/vol57-1978/bstj-vol57-issue06.html). It is a proven multi-user OS, where each user can run their own apps!

What is wrong with this picture? What if we want to run services? For example, what if I want to run my own mail service, or my own web service? Without being root, we cannot do that.

While this is a big problem, you can easily solve it. It turns out that most of the services you may want to run on your machine (mail, web, ftp, etc.) can easily be set up as virtual services on the same machine. For instance, Apache can be set up to serve many named virtual hosts. If we can give each user control over their own virtual services, we are all set.

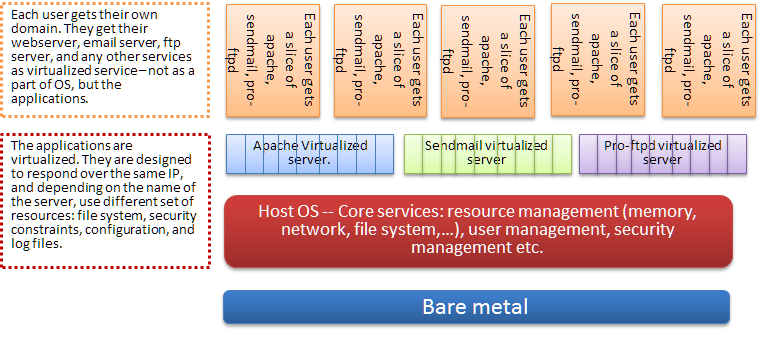

Virtualization: At application level

Several companies did exactly that. In the early days of the web, this was how they provided users their own services on the machine. In effect, users were sharing their servers: mail, web, ftp, etc. Even today, most static web hosting and prepackaged web apps run that way. There is even a popular web application, Webmin (and Virtualmin), that lets you manage the virtual services.

What is wrong with this picture? For the most part, for fixed needs and a fixed set of services, it works fine. Where it breaks down is the following:

- No resource limit enforcement: Since we are doing virtualization for each application, we have to depend on the good graces of the application to enforce resource limits. If your neighbor subscribes to a lot of mailing lists, your mail response will slow down. If you hog the CPU of the web server, your neighbors will suffer.

- Difficulty of billing: Since metering is difficult, we can only do flat billing. The pricing does not depend on the resource consumption.

- Unsupported apps: If the application you are interested in does not support this kind of virtualization, you cannot get the service. Of course, you have the choice of running the application in your own user space, with all the restrictions that come with it (example: no access to some range of ports).

- Lack of security: I can see which apps all the other users are running! Even if Unix itself is secure, not all apps may be secure. I may be able to peek into temp files, or even into the memory of the other apps.

So, is there another option? Can we provide a level of control to the users where they can run their own services?

We can do that if the OS itself can be virtualized. That is, it should provide complete control to the users, without the costs of a VM. Can it be done?

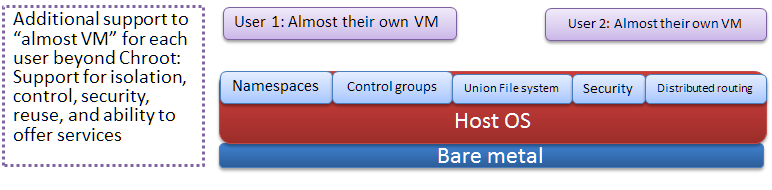

Virtualization: At OS level (Containers)

In the early days, there were VPSes (virtual private servers), which provided a limited bit of control. Over the years, this kind of support from the OS has become more sophisticated. In fact, there are now several options that elevate virtual private servers into containers, a very lightweight alternative to VMs.

If you want to see the results of such a setup, please see:

In the beginning there was chroot, which creates a “jail” for an application so that it cannot see anything outside a given folder and its subfolders. It is still a popular technique for sandboxing applications. Features like cgroups have since been added to the Linux kernel to limit, account for, and isolate the resource usage of process groups. That is, we can designate a sandbox and its subprocesses as a process group and manage them that way. Many more improvements, which we will describe, make full-scale virtualization possible.

Now, there are several popular choices for running these kinds of sandboxes or containers: Solaris-based SmartOS (Zones), FreeBSD Jails, Linux’s LXC, Vserver, and commercial offerings like Virtuozzo and so on. Remember that a container cannot change the kernel of the OS it runs on. It can, however, override any libraries (see the section on the union file system later).

In the Linux-based open source world, there are two that are gaining in popularity: OpenShift and Docker. It is the latter that I am fascinated with. Ever since dotCloud open-sourced it, there has been a lot of enthusiasm about the project. We are seeing a lot of tools, usability enhancements, and special-purpose containers.

I am a happy user of Docker. Most of what you want to know about Docker can be found at docker.io. I encourage you to play with Docker: all you need is a Linux machine (even a VM will do).

Technical details: How containers work

Warning: This is somewhat technical, so unless you are familiar with the way an OS works, you may not find it interesting. Here are the core features of the technology (most of this information is taken from “PaaS under the hood”, by dotCloud).

Namespaces

Namespaces isolate resources of processes from each other (pid, net, ipc, mnt, and uts).

- pid isolation means that processes residing in a namespace do not even see processes outside it.

- net namespace means that each container can bind to whatever port it wishes to. That means, port 80 is available to all containers! Of course, to make it accessible from outside, we need to do a little mapping – more later. Naturally, each container can have its own routing table, and iptables configuration.

- ipc isolation means that processes in a namespace do not even see other processes for IPC. It increases security and privacy.

- mnt isolation is something like chroot. In a namespace, we can have completely independent mount points. The processes see only that file system.

- uts namespace lets each namespace have its own hostname.
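On a Linux machine, you can see which namespaces a process belongs to by looking at the symbolic links under `/proc/<pid>/ns`. Here is a minimal Python sketch of that, assuming a Linux host with `/proc` mounted; the function name `list_namespaces` is made up for this illustration. Two processes that share a namespace report the same identifier for it, and processes in different containers would normally report different ones.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace name: identifier} for a process.

    Assumes a Linux /proc file system; returns an empty dict elsewhere.
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    if not os.path.isdir(ns_dir):
        return result
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may not be readable without extra privileges.
            continue
    return result


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:10s} {ident}")
```

Run it once on the host and once inside a container, and compare the identifiers.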

Control groups (cgroups)

Control groups, originally contributed by Google, let us manage resource allocation for groups of processes. We can do accounting and resource limiting at the group level. We can set the amount of RAM, swap space, cache, CPU, etc. for each group. We can also bind a core to a group, a feature useful in multicore systems! Naturally, we can also limit the number of I/O operations or bytes read or written.

If we map a container to a namespace and the namespace to a control group, we are all set in terms of isolation and resource management.
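To check which control groups a process has been placed in, Linux exposes `/proc/<pid>/cgroup`, with one line per hierarchy in the form `hierarchy-id:controllers:path`. The sketch below parses that format in Python. It assumes a Linux host; the function names and the sample paths in the usage note are invented for illustration.

```python
def parse_cgroup_file(text):
    """Parse the contents of /proc/<pid>/cgroup.

    Each line has the form "hierarchy-id:controllers:path".
    Returns a list of (controllers, path) tuples.
    """
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split only twice: the path itself may contain colons.
        _hierarchy, controllers, path = line.split(":", 2)
        entries.append((controllers.split(",") if controllers else [], path))
    return entries


def current_cgroups(pid="self"):
    """Return the parsed cgroup membership of a process, or [] if unavailable."""
    try:
        with open(f"/proc/{pid}/cgroup") as f:
            return parse_cgroup_file(f.read())
    except OSError:
        return []


if __name__ == "__main__":
    for controllers, path in current_cgroups():
        print(",".join(controllers) or "(unified)", path)
```

For example, a made-up line such as `4:memory:/docker/abc` parses to `(["memory"], "/docker/abc")`: the process is subject to whatever memory limit is set on that group.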

AUFS (Another Union File System)

Imagine the scenario. You are running in a container. You want to use the base OS facilities: kernel, libraries -- except for one package, which you want to upgrade. How do you deal with it?

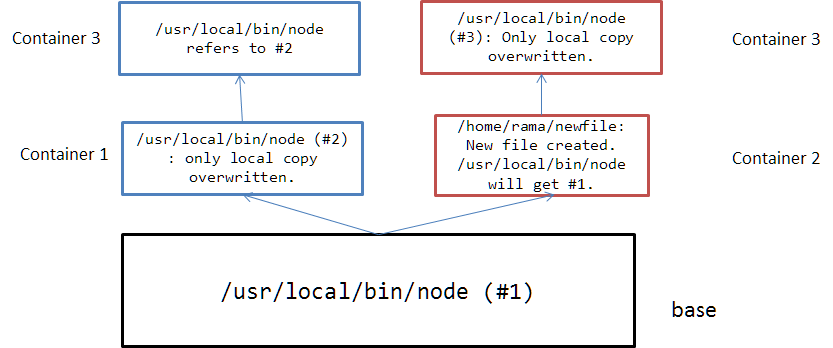

In a layered file system, we create only the files we want to change. These files supersede the files in the base file system. And, naturally, other containers see only the base file system, and they too can selectively overwrite files in their own file system space. All this looks and feels natural: everybody is under the illusion of owning the file system completely.

The benefits are several: storage savings, fast deployments, fast backups, better memory usage, easier upgrades, easy standardization, and ultimate control.
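The layering idea can be modeled in a few lines of Python with `collections.ChainMap`, which looks keys up through a stack of dictionaries and sends all writes to the first one. This is only a toy model of the concept, not how AUFS is implemented; the file paths and contents are made up.

```python
from collections import ChainMap

# Read-only base layer shared by every container (hypothetical contents).
base = {
    "/lib/libssl.so": "libssl 1.0",
    "/usr/bin/python": "python 2.7",
}


def new_container_fs(base_layer):
    """Return a file system view: a private writable layer over the base."""
    return ChainMap({}, base_layer)


a = new_container_fs(base)
b = new_container_fs(base)

# Container a upgrades one library; the write lands in its own top layer.
a["/lib/libssl.so"] = "libssl 1.1"

print(a["/lib/libssl.so"])   # container a sees its own copy
print(b["/lib/libssl.so"])   # container b still sees the base copy
print(a["/usr/bin/python"])  # unchanged files fall through to the base
```

Note that the base dictionary is never modified, which is where the storage savings come from: each container stores only its differences.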

Security

A set of security patches rolled into one, called grsecurity, offers additional security:

- Protection against buffer overflow attacks

- Separation of executable code from writable memory

- Randomizing the address space

- Auditing suspicious activity

While none of them are revolutionary, taken together all these steps offer the required security between the containers.

Distributed routing

Let us suppose each person runs their own Apache on port 80. How do they expose that service on that port to outsiders? Remember that with VMs, you either get your own IP or you get to hide behind a NAT. If you have your own IP, you get to control your own ports, etc.

In the world of containers, this kind of port-level magic is a bit more complex. In the end, though, you can set up a bridge between the OS and the container so that the container can share the same network interface, using a different IP (perhaps granted by a DHCP source, or set manually).

A more complex case: if you are running hundreds of containers, is there a way to offer better throughput for the service requests, specifically if you are running web applications? That question can be handled by using standard HTTP routers (like nginx, etc.).
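To make the routing idea concrete, here is a toy Python sketch of what such a router does at its core: it looks at the Host header of a request and hands the request to one of the containers serving that site, in round-robin order. This is not nginx's API or configuration, just an illustration; the class name, hostnames, and container addresses are all hypothetical.

```python
import itertools


class Router:
    """Toy HTTP router: maps a Host header to a pool of container backends."""

    def __init__(self):
        self._pools = {}

    def add_route(self, host, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("a route needs at least one backend")
        # itertools.cycle yields the backends in round-robin order, forever.
        self._pools[host.lower()] = itertools.cycle(backends)

    def pick_backend(self, host):
        """Return the next backend for this host, or None if unknown."""
        # Drop an optional ":port" suffix from the Host header.
        name = host.split(":", 1)[0].lower()
        pool = self._pools.get(name)
        return next(pool) if pool is not None else None


router = Router()
router.add_route("alice.example.com", ["172.17.0.2:80", "172.17.0.3:80"])
router.add_route("bob.example.com", ["172.17.0.4:80"])
```

Each container still listens on its own port 80; the router is the only thing that needs the public port, and successive calls to `router.pick_backend("alice.example.com")` spread the requests across both of that site's containers.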

Conclusion

If you followed me so far, you learnt:

- What containers are

- How they logically relate to VMs

- How they logically extend Unix

- What the choices are

- What the technical underpinnings of containers are

- How to get started with containers

Go forth and practice!